Project Implementation Overview


Before diving into UI/UX design, I needed to deeply understand how VR and AR systems work. This meant:

- Wearing VR/AR headsets multiple times to analyze navigation schemes, user comfort, and interaction dynamics.
- Studying existing UI functionality and interaction patterns in immersive environments.
- Collaborating closely with researchers and engineers to understand their specific interface needs.

Based on these findings, I iteratively created low-fidelity wireframes, continuously refining them through user feedback and usability testing. The interface needed to accommodate:

- Mode selection (various tracking and interaction modes).
- Navigation through available digital twins and their real-time data.
- Playback of recorded sessions from VR operations.
- Account management and access controls for different users.
- Camera view selection and dynamic scene adjustments.
- Quick-access shortcuts to improve workflow efficiency.

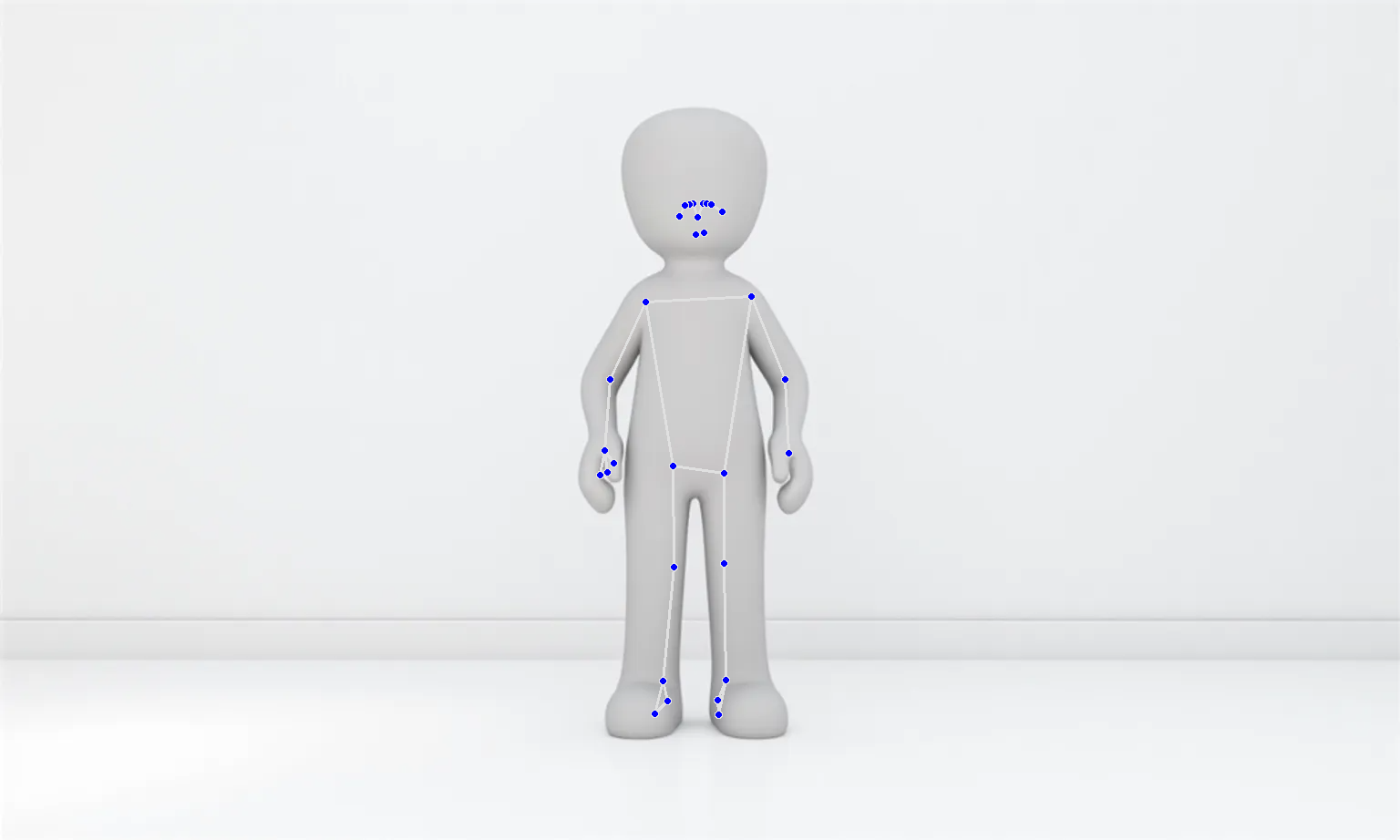

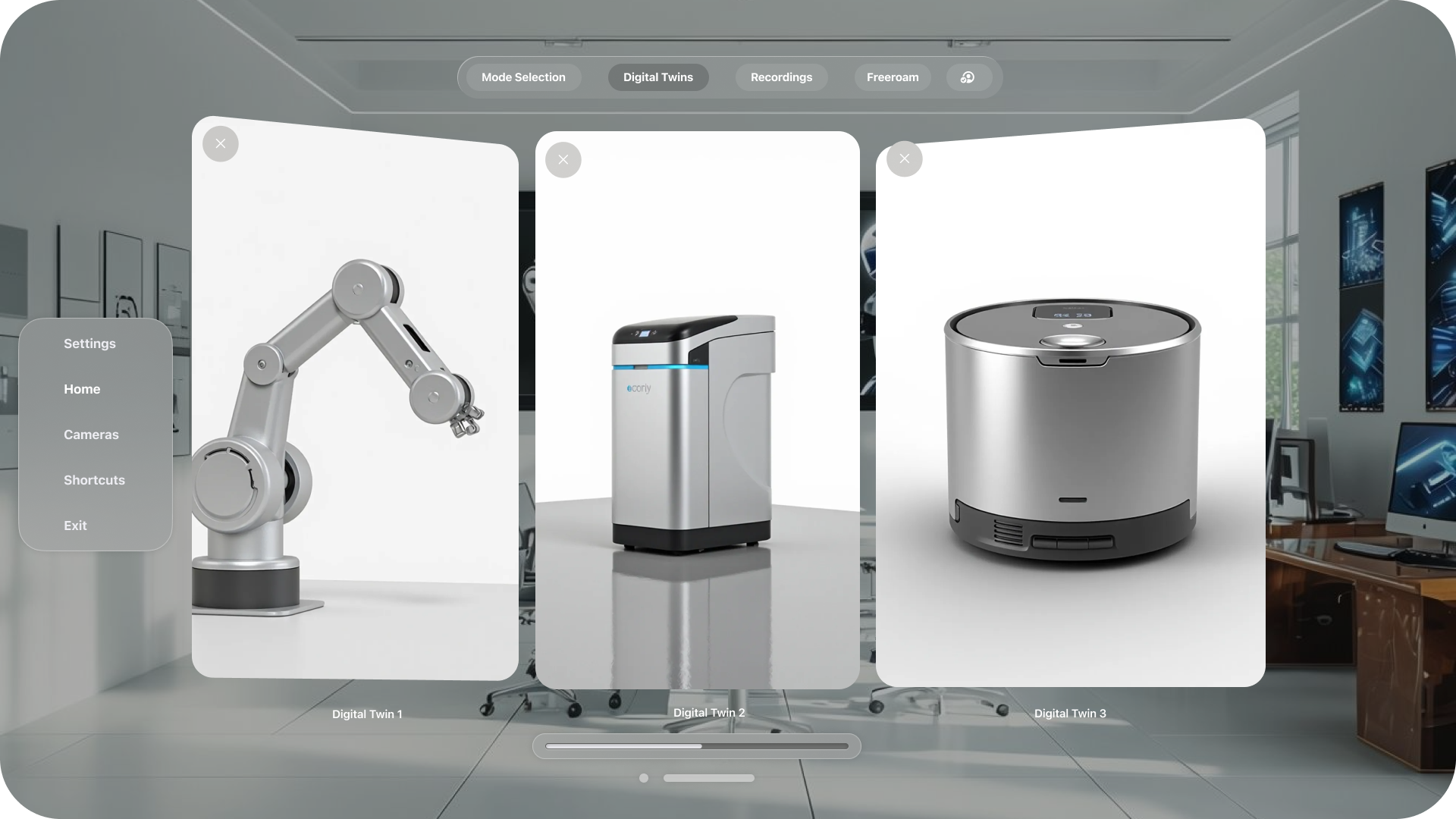

After validating the low-fidelity designs, I created high-fidelity UI/UX wireframes in Figma, incorporating:

- Digital assets and UI elements designed in Adobe Illustrator and Photoshop for a visually immersive experience.
- Smooth transitions and interactions for an intuitive, VR-ready UI.
- Human pose tracking data integration, where I ran AI models on digital elements and embedded the results directly into the interface.

As seen in the project video, the UI transitions seamlessly between multiple functional modes, including:

- Pose Detector Mode: identifies human skeleton positioning in real time.
- Pose Tracker Mode: continuously tracks human movement across the workspace.
- Heatmap Mode: visualizes high-movement or high-stress areas for analysis.
- Interactive Mode: lets users manipulate digital elements based on their tracked movement.
- Digital Twin Mode: displays and controls robotic arms, objects, and people within the scene.

Each mode required a tailored UI layout to optimize clarity, usability, and functionality in an immersive setting.
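To make the idea behind a movement heatmap more concrete, here is a minimal sketch of how tracked pose data could be accumulated into one. It assumes each frame provides the same list of joints as normalized (x, y) coordinates in [0, 1]. This is an assumption for illustration only, since the project's actual pose models, data format, and rendering pipeline are not described here. The function names `movement_heatmap` and `normalize` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # hypothetical format: (x, y) normalized to [0, 1]


def movement_heatmap(
    frames: Sequence[Sequence[Point]], grid_w: int = 8, grid_h: int = 8
) -> List[List[float]]:
    """Accumulate per-joint displacement into a grid_h x grid_w heatmap.

    frames[t][j] is joint j's position at frame t. Every frame is assumed
    to list the same joints in the same order.
    """
    heat = [[0.0] * grid_w for _ in range(grid_h)]
    # Compare each frame with the one before it.
    for prev, curr in zip(frames, frames[1:]):
        for (x0, y0), (x1, y1) in zip(prev, curr):
            # Euclidean distance the joint moved between the two frames.
            dist = ((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5
            # Map the joint's current position to a cell, clamping to the grid.
            col = max(0, min(int(x1 * grid_w), grid_w - 1))
            row = max(0, min(int(y1 * grid_h), grid_h - 1))
            heat[row][col] += dist
    return heat


def normalize(heat: List[List[float]]) -> List[List[float]]:
    """Scale values to [0, 1] so the busiest cell maps to full intensity."""
    peak = max(max(row) for row in heat)
    if peak == 0:
        return [row[:] for row in heat]
    return [[v / peak for v in row] for row in heat]


if __name__ == "__main__":
    # Two joints over two frames: joint 0 stays still, joint 1 moves upward.
    frames = [[(0.1, 0.1), (0.9, 0.9)], [(0.1, 0.1), (0.9, 0.5)]]
    print(normalize(movement_heatmap(frames, grid_w=2, grid_h=2)))
```

Summing displacement per cell, rather than counting how often a joint appears there, highlights regions where people move a lot instead of regions where they simply stand. A real system might also smooth or decay values over time.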

This project was one of the steepest learning curves in my journey so far, as it required me to bridge two complex domains, Computer Vision and UX Design, while working with a cross-functional team. Beyond the technical skills, this experience taught me the value of translating technical research into an accessible, usable interface. I gained a deep understanding of how to structure immersive experiences that balance technical constraints with user needs. More importantly, I developed a valuable skill set in interpreting project requirements and delivering what is needed, both functionally and aesthetically.